The Problem with Facebook. And How to Fix It.

- Chris Lloyd

- Mar 6, 2021

Chris Lloyd is Professor of Statistics at Melbourne Business School, University of Melbourne.

In his Sunday Telegraph column last weekend, Daniel Hannan complained that Facebook cancelled advertisements promoting his article for the John Locke Institute's News & Opinion.

The most likely explanation is that people who dislike Lord Hannan, or his opinions on Brexit, or his political party, or all of the above, reported the ads to Facebook, alleging that they constitute a kind of political advertising, for which Facebook has imposed more onerous (and not entirely transparent) rules. Neither the advertisement nor the article itself is party-political, but you can see quite easily why people of a certain ideological persuasion would prefer not to listen to Lord Hannan's voice. If they can get away with it, they might try to stop the rest of us from hearing it too.

This reveals a serious problem with Facebook. The big problem with Facebook, though, is not that they censor free speech according to their own quixotic principles. It is not their market domination. It is not that they are displacing the mainstream media. It is not that they fail to remove hateful garbage. It is not even that they livestream murders once or twice each year.

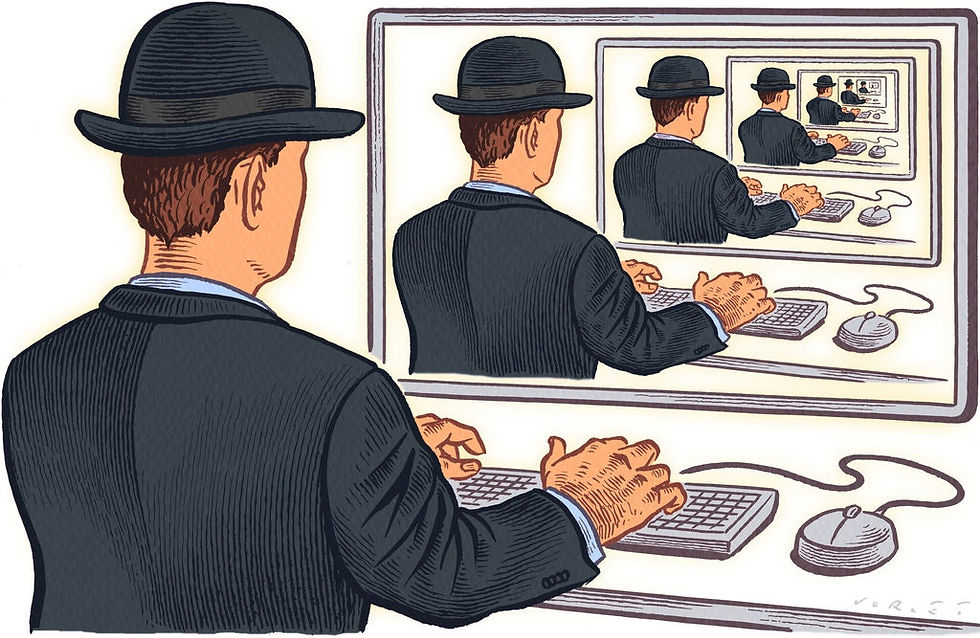

The problem with Facebook is their central business model: We will keep you on-line for as long as we can, using any psychological tricks that our social scientist consultants and data scientists have shown will work. If we can do that then we can maximise the value of selling you to our advertisers.

The psychological tricks, it turns out, mainly come down to telling people what they want to hear, making sure that they never hear a dissenting opinion and rewarding them with likes. The Facebook bubble feels pretty good for those who crave validation. It is the ultimate “safe space”, though left-leaning folks usually mean something else when they use this term.

I make a special effort to subscribe to groups and individuals with whom I am likely to disagree. But unless I can get them to engage with me, the Facebook algorithm will stop showing my posts to them or their posts to me. Our entire society is part of a world-wide experiment that never required ethics approval and would certainly have been denied approval by any committee on which I have served. The subjects are manipulated without being told how, and their private data is sold to private companies. And there are around 2.7 billion active users. That’s a lot of lab rats.

So, what is to be done?

*****

The Fairness Doctrine

In 1949 the US Federal Communications Commission (FCC) introduced a policy, the ‘Fairness Doctrine’, which required holders of broadcast licences (i.e. TV and radio) to air contrasting views on controversial issues of public importance. It was upheld by the Supreme Court in 1969 but was eventually abolished by the FCC in 1987, under the influence of Ronald Reagan. That same year a Democrat-controlled Congress tried to write the doctrine into law, but Reagan vetoed the bill. The doctrine was perceived to be, in practice, anti-conservative.

Some have argued that this event was the beginning of the partisan broadcast media. Notwithstanding the difficulty of administering a requirement of fairness, I think it is hard to argue that abandoning the principle, even as an aspiration, had no effect on media culture. Rush Limbaugh’s influence seemed to intensify and spread at exactly this time. He would not have been able to broadcast his egregious and deliberately provocative brand of political commentary before 1987. Indeed, he was fired from an early radio job, at KQV, in 1974 for overstepping the mark.

Was Fox News also a consequence? Some might argue not because, as a cable network, they are not considered a broadcaster and would not have been subject to the doctrine. This misses the point, however: had the doctrine not been scrapped, it could, and surely would, have been extended to other media channels, provided there was support for the principle.

How does this apply to Facebook in 2021?

The Fairness Doctrine required media to “air opposing views on controversial issues”. While Facebook is not a media outlet, there is no reason to limit the principle to the rapidly declining media. It is the principle of diversity of opinion that is important. The culprit is the algorithm, and it cannot be allowed to continue. We cannot suffer this abomination to infect, brainwash and ultimately control every poor sod who is on-line, not with one ideology, of course, but with a whole range of equally deranged ideologies. The MAGAs are as brainwashed as the Flat-earthers, the SJWs, the BLM true believers, and the White Supremacists - all fed a barrage of alternative facts from alternative universes - while 36-year-old Mark Zuckerberg, in his grey T-shirt, pretends to apologise.

There may be only a few years left in which governments still have the agency to intervene. Governments need to pass a bill requiring social media platforms that reach a sufficiently large market to modify their algorithms in line with the Fairness Doctrine.

The application of the Fairness Doctrine to the algorithm would be as follows: Facebook are easily able to classify people based on their networks. There is the left-right dimension, of course, but there are also finer ones: the dimension for authoritarianism; individual versus community; materialism versus spiritualism; national versus global; race-blind versus anti-racist. Facebook is already using this kind of machine learning technology. That is how they target the ads and the posts that appear on your news feed. You are a data point in multi-dimensional space. So is every user; and Facebook can see who you are connected to, and in what way.
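To make the idea concrete, here is a minimal Python sketch of classifying a user from their network. It assumes, purely for illustration, that every account already has a position on four hypothetical axes drawn from the dimensions listed above, that a user's own position can be estimated as the average position of the accounts they connect to, and that each user is then assigned to a viewpoint cell according to which side of zero they fall on each axis. The names `AXES`, `position_from_network` and `viewpoint_cell` are invented here; this is not how Facebook actually models its users.

```python
from statistics import fmean
from typing import Sequence, Tuple

# Hypothetical axes (an assumption), chosen from the dimensions named in the text.
AXES = (
    "left_right",
    "authoritarianism",
    "individual_community",
    "national_global",
)

Position = Tuple[float, ...]
Cell = Tuple[int, ...]


def position_from_network(connections: Sequence[Sequence[float]]) -> Position:
    """Estimate a user's position as the mean position of their connections."""
    if not connections:
        raise ValueError("user has no connections to learn from")
    for c in connections:
        if len(c) != len(AXES):
            raise ValueError(f"expected {len(AXES)} coordinates, got {len(c)}")
    # Average each axis separately across all connections.
    return tuple(fmean(c[i] for c in connections) for i in range(len(AXES)))


def viewpoint_cell(position: Sequence[float]) -> Cell:
    """Assign a cell: 1 if the position is above zero on an axis, else 0."""
    return tuple(1 if x > 0 else 0 for x in position)


if __name__ == "__main__":
    # Two hypothetical accounts the user is connected to.
    friends = [(-0.5, 0.8, 0.1, 0.4), (-0.3, 1.0, -0.5, 0.8)]
    me = position_from_network(friends)
    print(viewpoint_cell(me))  # (0, 1, 0, 1)
```

Splitting each axis at zero is the simplest possible choice of reference point; any real scheme would have to justify where the dividing line sits.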

The modified algorithm would deliver contrasting views to your news feed. Maybe there are four dimensions with two sides each, implying sixteen combinations. You get a Guardian post on refugees because you subscribe to the Guardian. I say that your feed should automatically attract posts from the other fifteen viewpoints. You should see a post from the Telegraph on wind turbines killing birds. Then one on Islamic fundamentalism. Then one on Chinese soft power. Then a post from your weird Flat-earther Facebook friend. You get the idea.
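The arithmetic is two sides on each of four axes, 2 × 2 × 2 × 2 = 16 cells, so every user has 15 contrasting cells. The Python sketch below enumerates them and interleaves one contrasting post after each post the user chose. It assumes cells are tuples of 0/1 flags, one per axis, and that posts have already been grouped by cell; `contrasting_cells`, `diversified_feed` and the sample headlines are hypothetical.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Cell = Tuple[int, ...]  # one 0/1 flag per axis


def contrasting_cells(own: Cell) -> List[Cell]:
    """Every viewpoint cell except the user's own: 2**n - 1 cells for n axes."""
    return [c for c in product((0, 1), repeat=len(own)) if c != own]


def diversified_feed(
    own_posts: Sequence[str],
    posts_by_cell: Dict[Cell, Sequence[str]],
    own: Cell,
) -> List[str]:
    """Alternate the user's chosen posts with one post from each contrasting cell."""
    # First available post from each contrasting cell; empty cells are skipped.
    contrasting = [
        posts_by_cell[c][0] for c in contrasting_cells(own) if posts_by_cell.get(c)
    ]
    feed: List[str] = []
    for i in range(max(len(own_posts), len(contrasting))):
        if i < len(own_posts):
            feed.append(own_posts[i])
        if i < len(contrasting):
            feed.append(contrasting[i])
    return feed


if __name__ == "__main__":
    me = (0, 1, 0, 1)
    print(len(contrasting_cells(me)))  # 15
    posts = {
        (1, 1, 0, 1): ["Telegraph: wind turbines and birds"],
        (0, 0, 0, 0): ["Flat-earther friend: the horizon is flat"],
    }
    print(diversified_feed(["Guardian: refugees"], posts, me))
```

Taking only the first post from each cell keeps the sketch short; deciding which post should represent a cell is a separate ranking problem.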

Will users read these posts? They can be required to open them before they see anything else new on their news feed. No more cat videos or photos of their friends until they look at the wind turbine story. The algorithm might require the page to remain open for at least sixty seconds. Facebook can monitor this with cookies. They cannot guarantee that you read it, of course, but they can cajole you into it. All of this is feasible, given what Facebook already does in pursuit of brainwash-induced profit.
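The gating rule can be sketched as a small state machine in Python. It assumes the client reports when a required post is opened and closed (the timestamps are passed in explicitly, however they are actually collected, so the logic is easy to test), and it hides every other new item until each required post has been open continuously for at least sixty seconds. `GatedFeed` and its methods are hypothetical names, not part of any Facebook API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

MIN_DWELL_SECONDS = 60.0  # the "at least sixty seconds" from the text


@dataclass
class GatedFeed:
    """Hide new items until every required post has been open long enough."""

    required: List[str]
    opened_at: Dict[str, float] = field(default_factory=dict)
    satisfied: Set[str] = field(default_factory=set)

    def mark_opened(self, post_id: str, now: float) -> None:
        # Record when the post was opened (seconds on any monotonic clock).
        self.opened_at[post_id] = now

    def mark_closed(self, post_id: str, now: float) -> None:
        # Count the view only if this continuous viewing lasted long enough.
        start = self.opened_at.pop(post_id, None)
        if start is not None and now - start >= MIN_DWELL_SECONDS:
            self.satisfied.add(post_id)

    def visible(self, new_items: List[str]) -> List[str]:
        # While any required post is outstanding, show only those posts.
        outstanding = [p for p in self.required if p not in self.satisfied]
        return outstanding if outstanding else list(new_items)


if __name__ == "__main__":
    feed = GatedFeed(required=["wind-turbines"])
    feed.mark_opened("wind-turbines", now=0.0)
    feed.mark_closed("wind-turbines", now=30.0)  # too short: still locked
    print(feed.visible(["cat-video"]))  # ['wind-turbines']
    feed.mark_opened("wind-turbines", now=100.0)
    feed.mark_closed("wind-turbines", now=161.0)  # 61 seconds: unlocked
    print(feed.visible(["cat-video"]))  # ['cat-video']
```

As the text concedes, a timer like this proves only that the page was open, not that it was read.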

Many people might leave Facebook for the dark web. But most current users (like you and me, I suspect) who mainly post holiday snaps or communicate with old school friends about reunions would not. Nor would those who want to use Facebook to be truly informed about current affairs or to communicate their ideas about current affairs. I would really welcome the automatic delivery of diverse news, professional comments and Facebook friend comments onto my feed, because trying to orchestrate this diversity myself is swimming against the tide of Facebook’s current algorithm.

So who would impose this? Which government would dare to declare war against Facebook?

I cannot see it happening in the US. There is too much suspicion of government. Any imposition of diversity would lead to further insurrections (though I hope it is clear from my explanation that I am talking about real diversity, not the distorted modern version of this term). As much as the heavy regulatory hand of the EU worries me, I wonder if they might not be uniquely placed to drive a stake through the heart of the monstrous algorithm that is doing so much damage to the world. The EU market is big enough that Facebook would have to comply, and the politics would play out much better there than in the US. I am not a Twitter user, so I do not know how this plays out on that and other platforms. But the principle is the key: Private companies have no right to create a widely used space where people are deliberately corralled into tiny islands on the political map, where bad ideas are protected from being challenged, all for the trivial purpose of keeping them on those tiny islands for venal profit.

Is it censorship? No. While it would be imposed by government regulators, I hope it is clear that it is not government censorship. For a start, nothing is banned over and above current limitations. More importantly, strong supporters of the current government will be exposed to anti-government views by the algorithm. Does it require judgement of what is fair and unbiased? No. It utilises an empirically revealed definition of diversity of news and comment. Users are forced to encounter the existing space of ideas. Will it force users towards a view? No. It would automatically draw users towards the empirical middle of the Facebook universe while making them aware of other parts of the universe.
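One way to read “an empirically revealed definition” is that the reference point on each axis is computed from the users themselves rather than chosen by a regulator. The Python sketch below takes the per-axis median of all users' positions as the empirical middle and assigns viewpoint cells relative to it. The use of the median (rather than, say, the mean) and the function names `empirical_centre` and `cell_relative_to` are assumptions for illustration.

```python
from statistics import median
from typing import Sequence, Tuple


def empirical_centre(positions: Sequence[Sequence[float]]) -> Tuple[float, ...]:
    """Per-axis median of all users' positions: the 'middle' is read off the data."""
    if not positions:
        raise ValueError("need at least one user")
    n_axes = len(positions[0])
    return tuple(median(p[i] for p in positions) for i in range(n_axes))


def cell_relative_to(position: Sequence[float], centre: Sequence[float]) -> Tuple[int, ...]:
    """1 on an axis if the user sits above the empirical middle, else 0."""
    return tuple(1 if x > c else 0 for x, c in zip(position, centre))


if __name__ == "__main__":
    # Three hypothetical users on two axes.
    users = [(0.2, 0.5), (0.4, -0.1), (0.9, 0.3)]
    centre = empirical_centre(users)
    print(centre)  # (0.4, 0.3)
    print([cell_relative_to(u, centre) for u in users])  # [(0, 1), (0, 0), (1, 0)]
```

In this toy example every user is above zero on the first axis, yet relative to the empirical middle they fall into different cells, so the dividing lines come from the data rather than from anyone's judgement of where the centre ought to be.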

In the spirit of this article, I welcome comments from those who disagree with me.